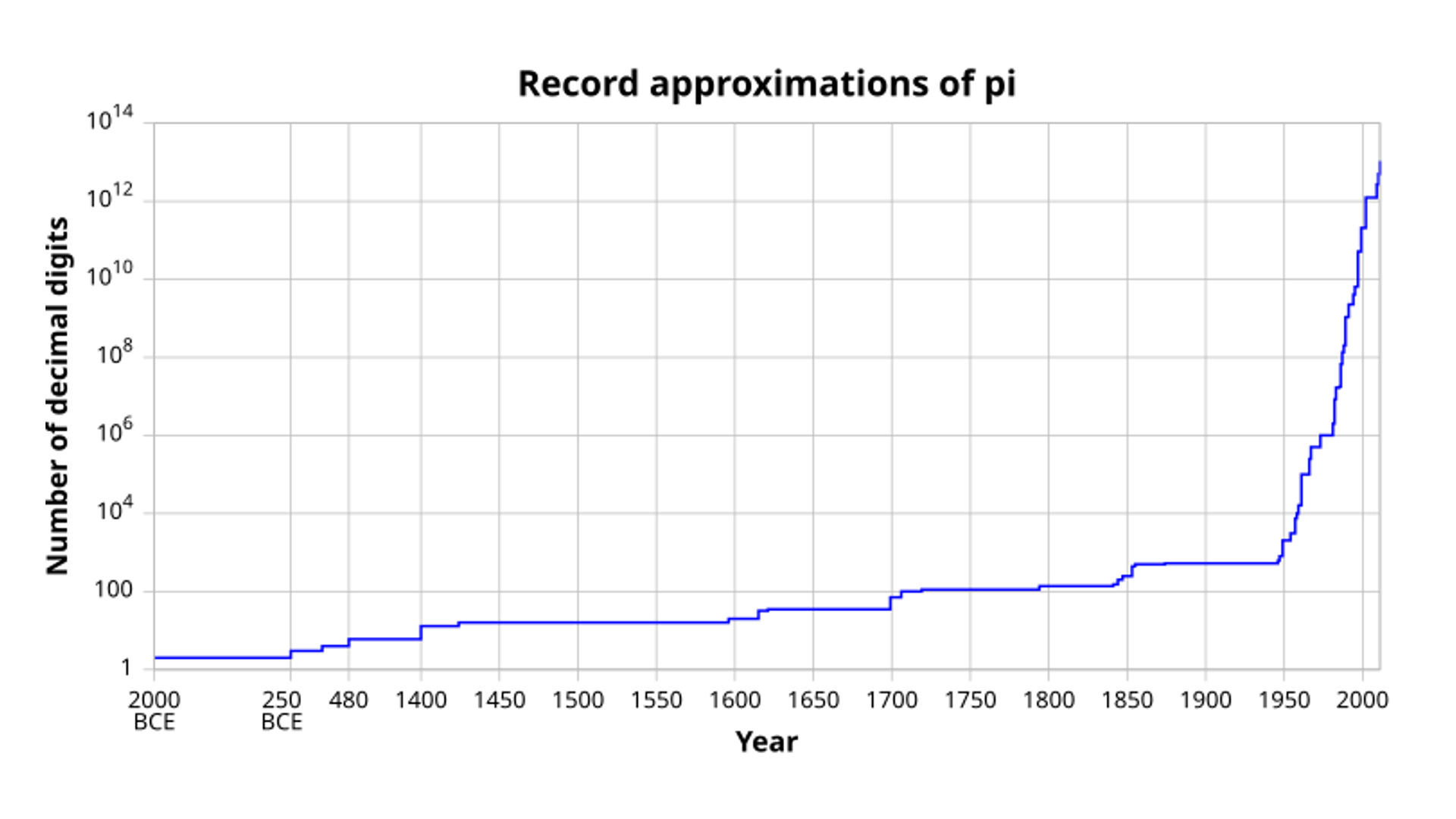

At the start of 2025, a team of researchers shattered the previous world record for computing digits of π (pi), pushing the known boundary of this famous irrational number even further. Using cutting-edge computational techniques, the researchers calculated over 120 trillion digits of pi, surpassing the previous record of 100 trillion digits set in 2022. This achievement is not just about setting records – it has important implications for computer science, numerical analysis, and even physics.

Why keep calculating pi?

Pi, the ratio of a circle’s circumference to its diameter, is one of the best-known mathematical constants. It is irrational, meaning its decimal expansion never ends and never settles into a repeating pattern. While most practical applications, such as engineering and physics, only require a few dozen digits of pi, extending its decimal expansion helps test the limits of modern computing. By verifying previously computed digits and pushing new boundaries, mathematicians can uncover errors in algorithms and hardware, improve high-precision arithmetic, and examine the statistical properties of pi’s digits.

The computational challenge

To calculate trillions of digits of pi, the research team used a combination of high-performance computing (HPC) systems and advanced numerical algorithms. One of the most efficient algorithms for this task is the Chudnovsky algorithm, which is built on a rapidly converging series: each additional term contributes roughly 14 more correct decimal digits. Even so, computing 120 trillion digits demands enormous computational power and careful memory management.
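
To make the idea of a rapidly converging series concrete, here is a minimal sketch of the Chudnovsky series in Python. It uses the standard library `decimal` module and is only practical for a few thousand digits. It is not the team's software: record-scale programs rely on techniques such as binary splitting and disk-backed arithmetic, and the function name `chudnovsky_pi` is just an illustrative choice.

```python
from decimal import Decimal, getcontext


def chudnovsky_pi(digits: int) -> str:
    """Return pi as a string with `digits` decimal places (truncated)."""
    # Work with a few guard digits so rounding does not affect the output.
    getcontext().prec = digits + 10

    # Constant factor in front of the series: 426880 * sqrt(10005).
    c = 426880 * Decimal(10005).sqrt()

    m = 1            # (6k)! / ((3k)! * (k!)^3), updated incrementally
    l = 13591409     # linear term: 13591409 + 545140134 * k
    x = 1            # (-262537412640768000)^k
    k6 = 6           # helper used in the recurrence for m
    total = Decimal(l)

    # Each term adds about 14 digits, so digits // 14 + 1 extra terms suffice.
    for k in range(1, digits // 14 + 2):
        m = m * (k6 ** 3 - 16 * k6) // k ** 3
        l += 545140134
        x *= -262537412640768000
        total += Decimal(m * l) / x
        k6 += 12

    pi = c / total
    return str(pi)[: digits + 2]  # "3." plus the requested digits


print(chudnovsky_pi(50))
```

Asking for 50 digits needs only five terms of the series, which is why this family of formulas is so popular for record attempts.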

The researchers optimized their software to take advantage of distributed computing, where multiple processors work in parallel to handle calculations. The process took several months of continuous computation, running on a supercomputer with petabytes of storage to handle the enormous amount of data. The result then had to be verified, because a single arithmetic error partway through the computation can corrupt every digit that follows.
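
The article does not say how the digits were verified. One approach commonly used in record attempts is a spot check with a digit-extraction formula such as Bailey-Borwein-Plouffe (BBP), which yields a hexadecimal digit of pi at a chosen position without computing the digits before it. The sketch below is an assumption about how such a check could look, not the team's method. It uses ordinary floating point, so it is only trustworthy for small positions, and the name `bbp_hex_digit` is hypothetical.

```python
def bbp_hex_digit(n: int) -> str:
    """Return the hex digit of pi at position n + 1 after the point."""

    def series(j: int) -> float:
        # Fractional part of sum over k of 16^(n - k) / (8k + j).
        s = 0.0
        # Terms with k <= n: modular exponentiation keeps numbers small.
        for k in range(n + 1):
            denom = 8 * k + j
            s = (s + pow(16, n - k, denom) / denom) % 1.0
        # Terms with k > n shrink quickly; stop once they are negligible.
        k = n + 1
        while True:
            term = 16.0 ** (n - k) / (8 * k + j)
            if term < 1e-17:
                break
            s += term
            k += 1
        return s % 1.0

    # BBP combination: 4*S1 - 2*S4 - S5 - S6, reduced to its fractional part.
    x = (4 * series(1) - 2 * series(4) - series(5) - series(6)) % 1.0
    return "%x" % int(x * 16)


# First eight hexadecimal digits of pi after the point.
print("".join(bbp_hex_digit(i) for i in range(8)))
```

If a digit obtained this way at a far-out position agrees with the main computation once that result is converted to hexadecimal, it is strong evidence that no error crept in earlier.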

Implications for science and technology

Beyond being a fascinating mathematical challenge, this record-breaking computation has real-world benefits. Many areas of science and engineering rely on high-precision arithmetic, including simulations in physics, climate modelling, and financial mathematics. The techniques developed to compute massive numbers of pi digits help improve error correction in numerical algorithms, optimize data storage methods, and push forward computational efficiency.

One particularly interesting application is in random number generation. Since pi’s digits appear to be randomly distributed, testing them at extreme scales helps scientists study pseudo-randomness and patterns in number theory. Some researchers even investigate whether the digits of pi contain meaningful sequences – though no hidden messages have been found so far!

Could this help prove the normality of pi?

A longstanding open question in mathematics is whether pi is normal, meaning that every digit, and more generally every finite block of digits, appears with the expected frequency in every base. In base 10, for example, each digit 0-9 should appear about 10% of the time. While no proof exists, computing and analysing vast stretches of pi’s digits provide statistical evidence consistent with normality. The new 120-trillion-digit dataset will allow mathematicians to test deeper properties of pi’s randomness.
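
As a rough illustration of what such a statistical check involves, the sketch below counts how often each digit appears in a string of decimal digits and computes a chi-square statistic against a perfectly uniform distribution. The function names are illustrative, and the sample is just the first 50 decimal places of pi, far too few to say anything meaningful. Equal single-digit frequencies are also only a necessary condition for normality, not a sufficient one.

```python
from collections import Counter

DIGITS = "0123456789"


def digit_frequencies(digits: str) -> dict:
    """Relative frequency of each decimal digit in the given string."""
    counts = Counter(digits)
    n = len(digits)
    return {d: counts.get(d, 0) / n for d in DIGITS}


def chi_square_uniform(digits: str) -> float:
    """Chi-square statistic against the hypothesis that all digits are equally likely."""
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts.get(d, 0) - expected) ** 2 / expected for d in DIGITS)


# First 50 decimal places of pi, used purely as a toy sample.
sample = "14159265358979323846264338327950288419716939937510"

for digit, freq in digit_frequencies(sample).items():
    print(digit, f"{freq:.2f}")
print("chi-square:", round(chi_square_uniform(sample), 3))
```

A small chi-square value means the observed counts sit close to the uniform expectation. On real datasets the same idea is extended to pairs, triples, and longer blocks of digits.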

What’s next for pi computation?

With computing power constantly improving, it’s only a matter of time before the next milestone is reached. The main limitations are hardware efficiency and energy consumption – supercomputers require vast amounts of electricity to perform these calculations. Some researchers are exploring whether quantum computing could accelerate computations of pi and other mathematical constants.

As mathematicians and computer scientists continue their quest to explore the depths of pi, one thing remains certain – the digits of pi will keep revealing new mathematical insights and pushing the boundaries of computational science. Whether for pure mathematics or technological advancement, this record-breaking achievement in 2025 marks yet another milestone in our never-ending exploration of numbers.